IT Infrastructure Evolution: A Journey Through History

The evolution of technology architecture has been crucial in the development and optimization of IT infrastructures. In recent decades, we have witnessed the transition from dedicated servers to virtualization and containerization. Each of these stages has brought significant improvements in efficiency, flexibility, and scalability, enabling the applications and services that businesses need to thrive in the digital era.

Understanding this evolution is key to maintaining competitiveness and anticipating future challenges.

Dedicated Servers

The Pillars of Traditional Infrastructure

“The right server for the right job.” - Bob Muglia

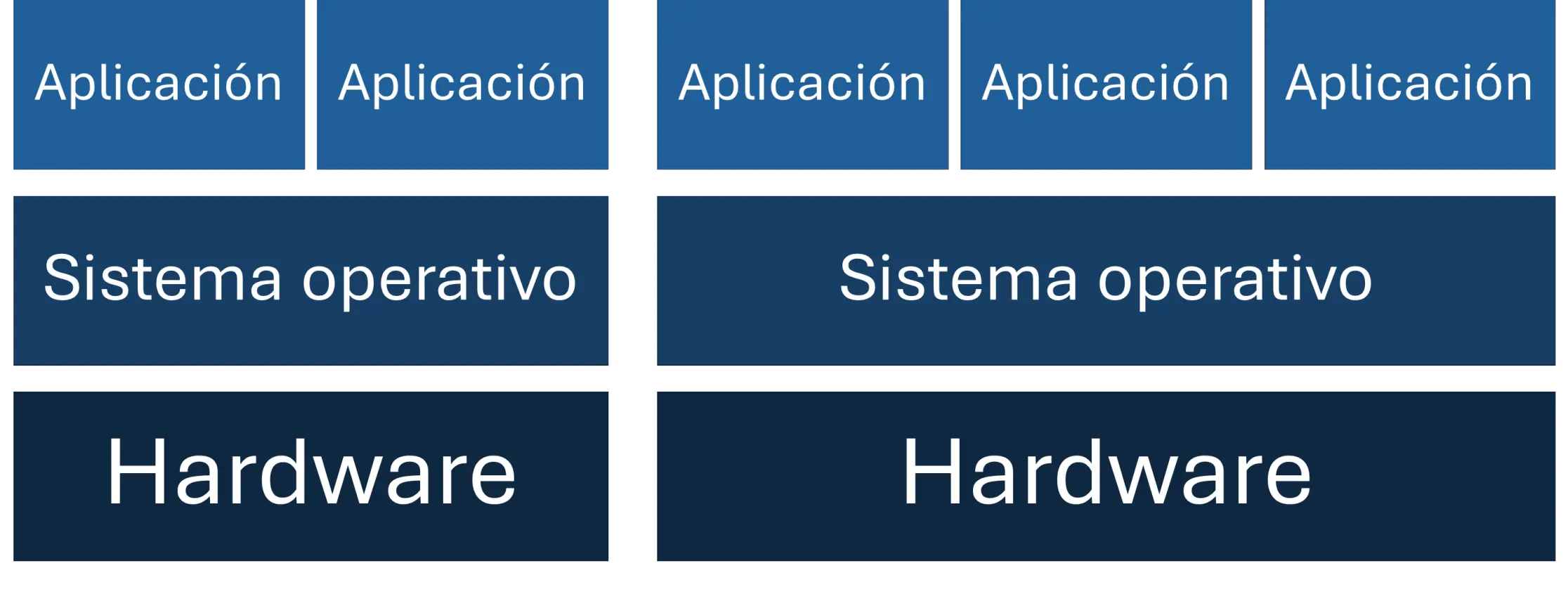

During the early years of enterprise computing, dedicated servers were the primary option available to businesses. These physical servers hosted critical applications, databases, and web services, with each application or service running on its own physical machine, which delivered a high level of performance and reliability. The dedicated server architecture gave companies total control over their resources, which was essential for maintaining a secure and predictable operating environment.

Figure 1. Traditional dedicated server architecture diagram

Advantages

- Performance and stability: Because each server is reserved for a single application or service, it provides an optimized environment that maximizes performance and delivers consistent, predictable behavior.
- High level of security: Dedicated servers offer total physical isolation, meaning that all machine resources are exclusive to a single client or user.
- Total hardware control: Exclusive control of the hardware is a critical advantage for many companies, as it allows deep customization of the server configuration. You can choose the processor, memory, storage, and network best suited to the company's needs, ensuring sizing aligned with its specific objectives.

Disadvantages

- High costs: The initial investment to acquire physical servers, plus ongoing maintenance, represents a significant barrier for many companies. In addition to hardware costs, companies also incur expenses for physical space, whether in a data center or on-site.
- Limited scalability: Greater capacity requires acquiring more hardware, which is not only expensive but also slow. Additionally, resources cannot be adjusted dynamically as demand fluctuates.
- Availability limitations: Ensuring high availability was a challenge, as the infrastructure depended on single physical servers. Hardware failures could result in prolonged downtime, and implementing redundancy was costly.
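
To see why depending on a single physical server limits availability, consider a rough calculation. The sketch below assumes a hypothetical per-server availability of 99% and independent failures, which real deployments rarely guarantee; under those assumptions, a redundant pair is unavailable only when both servers are down at the same time.

```python
HOURS_PER_YEAR = 24 * 365


def parallel_availability(per_server: float, servers: int) -> float:
    """Availability of N redundant servers, assuming independent failures
    and that any single surviving server can carry the full load."""
    return 1 - (1 - per_server) ** servers


for n in (1, 2):
    a = parallel_availability(0.99, n)  # 0.99 is a hypothetical figure
    downtime_h = (1 - a) * HOURS_PER_YEAR
    print(f"{n} server(s): availability {a:.4%}, ~{downtime_h:.1f} h downtime/year")
```

Under these assumptions, the second server cuts expected downtime from about 87.6 hours to under one hour per year, but it also doubles the hardware bill, which is exactly the trade-off that made redundancy costly with dedicated servers.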

Although dedicated servers remain a valid solution in certain situations, especially for applications that require high performance and strict isolation, their cost and scalability limitations drove the search for more efficient alternatives.

Virtualization

Maximizing Utilization and Flexibility

“Virtualization is like a Swiss army knife. You can use it for…” - Raghu Raghuram

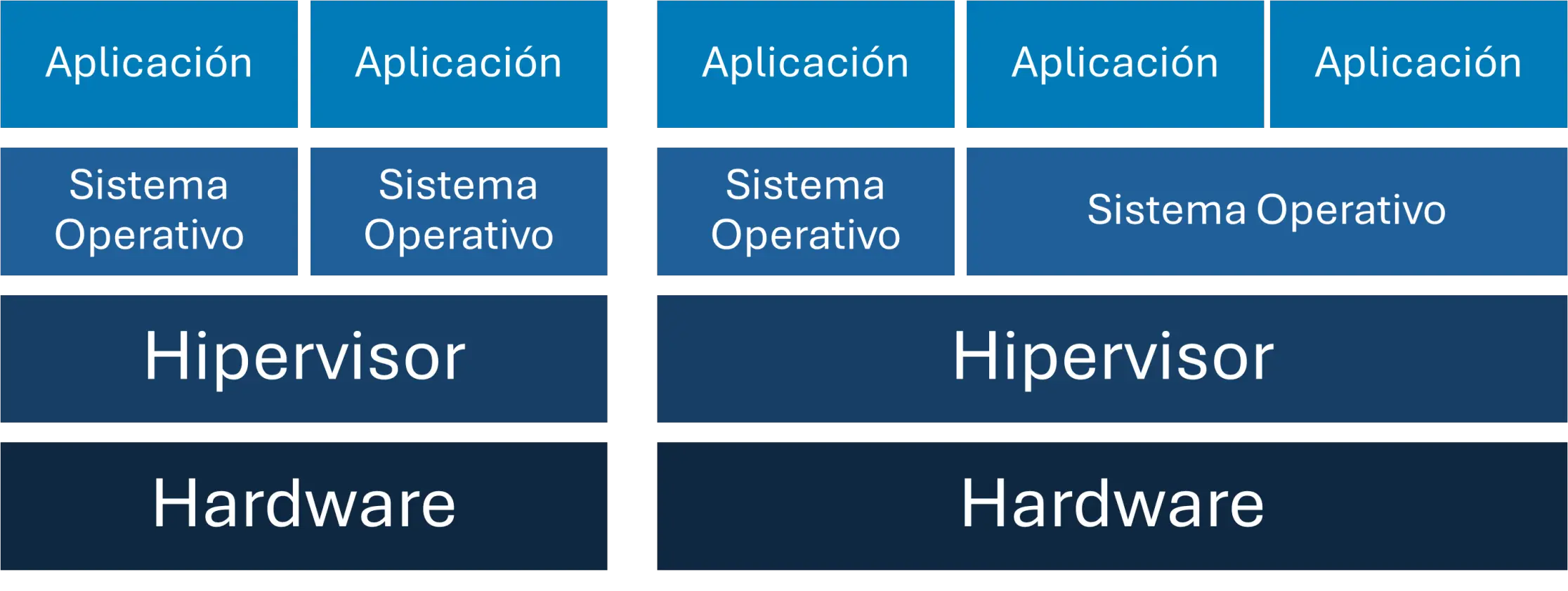

Virtualization on the x86 architecture, introduced commercially by VMware in the late 1990s, revolutionized hardware resource management by allowing multiple virtual machines (VMs) to run on a single physical server. It relies on a software layer called a hypervisor, which sits between the physical hardware and the guest operating systems and distributes resources among the VMs so that each one behaves as if it had its own dedicated hardware. This improved resource utilization and significantly reduced operational costs.

Commercial hypervisors such as VMware ESXi, Citrix XenServer, and Microsoft Hyper-V enabled companies to consolidate servers, improve redundancy, and simplify hardware management. Likewise, open-source technologies such as KVM (Kernel-based Virtual Machine) and management platforms like Proxmox have played a crucial role in the democratization of virtualization.

Figure 2. Virtualization-based architecture diagram
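
The server consolidation mentioned above is essentially a packing problem: fitting the workloads of many under-used physical servers onto fewer hosts. The following is a minimal sketch using the first-fit decreasing heuristic on a single resource (memory). The VM names, sizes, and host capacity are hypothetical, and real hypervisor management platforms also weigh CPU, I/O, affinity rules, and spare headroom when placing VMs.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    capacity_gb: int
    vms: list[str] = field(default_factory=list)
    used_gb: int = 0

    def fits(self, size_gb: int) -> bool:
        return self.used_gb + size_gb <= self.capacity_gb


def consolidate(vms: dict[str, int], host_capacity_gb: int) -> list[Host]:
    """Place VMs (name -> memory in GB) onto hosts using first-fit decreasing."""
    hosts: list[Host] = []
    # Largest VMs first: placing big items early tends to waste less space.
    for name, size in sorted(vms.items(), key=lambda kv: kv[1], reverse=True):
        if size > host_capacity_gb:
            raise ValueError(f"{name} does not fit on any host")
        # Reuse the first existing host with room; otherwise add a new host.
        target = next((h for h in hosts if h.fits(size)), None)
        if target is None:
            target = Host(capacity_gb=host_capacity_gb)
            hosts.append(target)
        target.vms.append(name)
        target.used_gb += size
    return hosts


# Hypothetical workloads that once ran on six dedicated servers.
workloads = {"web": 8, "db": 32, "mail": 4, "erp": 24, "files": 16, "dns": 2}
for i, host in enumerate(consolidate(workloads, host_capacity_gb=64)):
    print(f"host {i}: {host.vms} ({host.used_gb}/{host.capacity_gb} GB)")
```

With these numbers, the six workloads fit on two 64 GB hosts: one fully packed with `db`, `erp`, and `web`, and one with plenty of room left for growth.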

Advantages

- Resource optimization: Running multiple virtual machines (VMs) on a single server makes fuller use of the available hardware, which reduces costs and improves efficiency.
- Scalability: VMs can be created, cloned, or migrated easily, and their resources can be expanded or reduced as the company's needs change.
- Resilience: Virtualization platforms commonly offer high-availability features that automatically restart workloads on other servers when a host fails, minimizing downtime.

Disadvantages

- Hypervisor dependency: The additional hypervisor layer, which manages resources between VMs, introduces some performance overhead, although it is usually small.
- Resource overload: Although virtualization optimizes hardware use, running too many VMs on a single server can exhaust its resources and degrade performance if the infrastructure is not adequately sized.
- Shared security: Although VMs are isolated from one another, they share the underlying hypervisor and hardware, so a hypervisor vulnerability can put every VM on the host at risk.
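
The resource overload risk can be made concrete by tracking the CPU overcommit ratio: the virtual CPUs allocated to VMs divided by the physical cores on the host. The sketch below is illustrative only; the VM sizes and the 4:1 warning threshold are hypothetical assumptions, since an acceptable ratio depends heavily on how busy the workloads actually are.

```python
def vcpu_overcommit_ratio(vm_vcpus: list[int], physical_cores: int) -> float:
    """Total allocated vCPUs divided by the host's physical cores."""
    if physical_cores <= 0:
        raise ValueError("physical_cores must be positive")
    return sum(vm_vcpus) / physical_cores


# Hypothetical host with 16 physical cores running ten VMs (40 vCPUs in total).
vms = [8, 8, 4, 4, 4, 4, 2, 2, 2, 2]
ratio = vcpu_overcommit_ratio(vms, physical_cores=16)

MAX_RATIO = 4.0  # hypothetical policy threshold, not a vendor recommendation
status = "OK" if ratio <= MAX_RATIO else "at risk of contention"
print(f"overcommit {ratio:.1f}:1 -> {status}")
```

Overcommitting CPU is common because VMs rarely all peak at once; the overload the text describes appears when they do, so the ratio is a planning signal rather than a guarantee.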

Virtualization paved the way for the development of private, public, and hybrid cloud infrastructures, where IT resources are dynamically scalable according to demand.

Containerization

The Revolution of Portability and Scalability

“Our industry does not respect tradition, it only respects…” - Satya Nadella

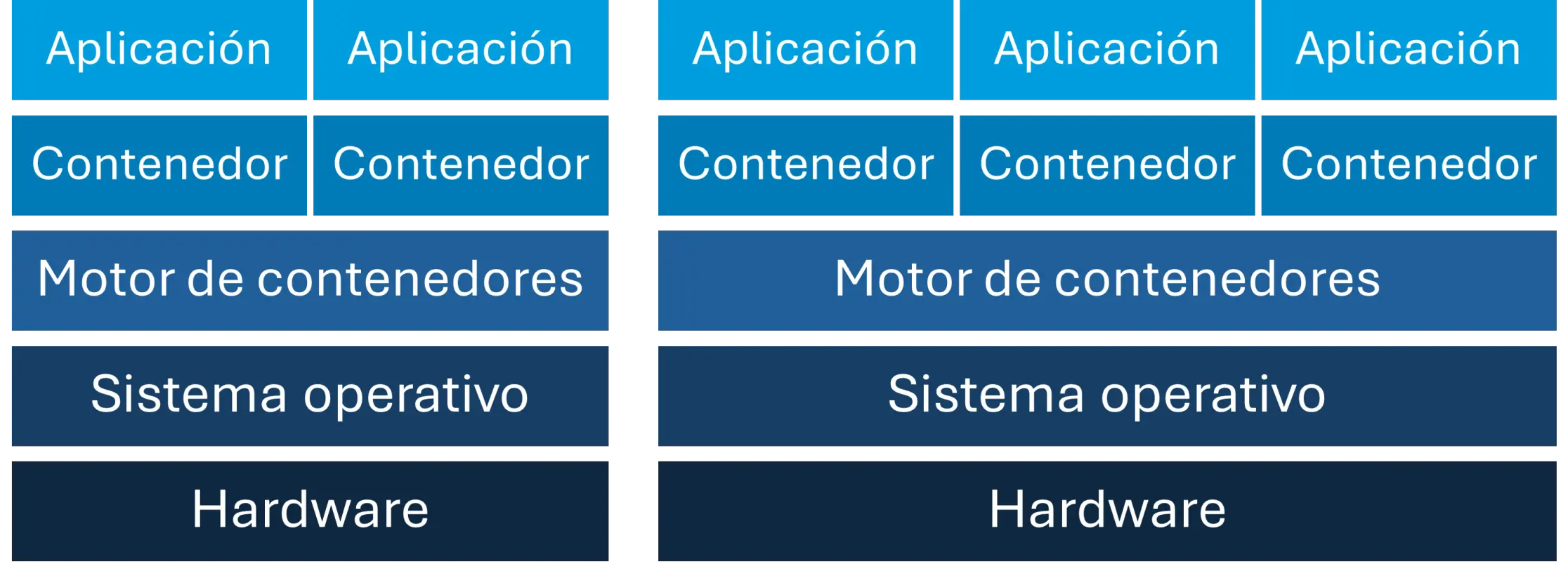

The next major advancement in technology infrastructure is containerization, popularized by Docker in the mid-2010s. Containerization has significantly transformed the way modern applications are developed, deployed, and managed.

Containers are built from an image, which packages the application together with all its dependencies. The image acts as an immutable template from which any number of container instances can be created. To run the application, the runtime pulls the image from a container registry (if it is not already available locally) and starts a container from it. The container runs as an isolated process that shares the host operating system's kernel. Isolation and resource control rely on Linux kernel features: namespaces give each container its own view of processes, networking, and the filesystem, while control groups (cgroups) limit how much CPU, memory, and I/O it can consume. As a result, each container operates independently, even when many of them share the same server.
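
As an illustration of the resource-control side, the Linux cgroup v2 interface limits CPU through a `cpu.max` file holding a quota and a period in microseconds, and memory through a `memory.max` file holding a byte count (or `max` for no limit). The helpers below only compute the values a container runtime might write for a given limit; they do not touch the filesystem, their names are hypothetical, and namespaces are not modeled at all. For comparison, Docker documents `--cpus=1.5` as equivalent to a 150000 µs quota over a 100000 µs period.

```python
from typing import Optional

DEFAULT_PERIOD_US = 100_000  # cgroup v2 default CPU period (100 ms)


def cpu_max(cpus: Optional[float], period_us: int = DEFAULT_PERIOD_US) -> str:
    """Value for cgroup v2 `cpu.max`: "<quota> <period>", or "max <period>"."""
    if cpus is None:
        return f"max {period_us}"  # no CPU limit
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    # A quota of 1.5 x period lets the group use 1.5 CPUs' worth of time per period.
    return f"{round(cpus * period_us)} {period_us}"


def memory_max(mebibytes: Optional[int]) -> str:
    """Value for cgroup v2 `memory.max`, in bytes, or "max" for no limit."""
    return "max" if mebibytes is None else str(mebibytes * 1024 * 1024)


print(cpu_max(1.5))     # 150000 100000
print(cpu_max(None))    # max 100000
print(memory_max(512))  # 536870912
```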

Kubernetes, a container orchestration platform originally designed by Google, took container management to a new level by making it possible to deploy, manage, and scale containerized applications across distributed clusters. Beyond automating deployment and scaling, Kubernetes restarts or replaces failed containers, balances traffic across replicas, and uses health checks to continuously drive the cluster toward its declared desired state, helping maintain performance and high availability.

Figure 3. Container-based architecture diagram
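
Kubernetes controllers work by repeatedly comparing a declared desired state with the observed state and acting to close the gap. The loop below is a toy model of that reconciliation idea for a single replica count. The `Cluster` class, `reconcile` function, and pod names are hypothetical and bear no relation to the real Kubernetes API, which a production controller would reach through a client library.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy stand-in for observed cluster state (hypothetical, not the Kubernetes API)."""
    pods: set[str] = field(default_factory=set)
    _ids: itertools.count = field(default_factory=itertools.count)

    def start_pod(self, app: str) -> None:
        self.pods.add(f"{app}-{next(self._ids)}")

    def stop_pod(self, name: str) -> None:
        self.pods.discard(name)


def reconcile(cluster: Cluster, app: str, desired: int) -> None:
    """One pass: create or delete pods until the observed count matches `desired`."""
    diff = desired - len(cluster.pods)
    for _ in range(max(diff, 0)):
        cluster.start_pod(app)
    # sorted() returns a new list, so stopping pods while iterating is safe.
    for name in sorted(cluster.pods)[:max(-diff, 0)]:
        cluster.stop_pod(name)


cluster = Cluster()
reconcile(cluster, "web", desired=3)  # scale up from 0 to 3 pods
cluster.stop_pod("web-1")             # simulate a failed pod
reconcile(cluster, "web", desired=3)  # the next pass replaces it
print(sorted(cluster.pods))
```

Because each pass only looks at the current gap, the same loop handles initial deployment, failure recovery, and scaling down, which is the property that lets Kubernetes treat all three uniformly.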

Advantages

- Efficiency: Containers are significantly lighter than virtual machines because they share the host's operating system kernel instead of booting a full guest operating system, which allows applications to start and stop quickly.
- Portability: Because the image bundles the application with its dependencies, containers behave consistently across development, testing, and production environments, simplifying deployment on multiple platforms.
- Horizontal scalability: Containers can be replicated and scaled quickly, allowing efficient handling of demand peaks.
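
In Kubernetes, this kind of horizontal scaling can be automated with the HorizontalPodAutoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no action taken when the ratio is within a tolerance of 1.0 (0.1 by default). The function below reproduces that rule as a simplified sketch; the real controller also accounts for pod readiness, missing metrics, and stabilization windows, and the replica bounds and CPU figures in the example are hypothetical.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HorizontalPodAutoscaler rule for a single metric."""
    ratio = current_metric / target_metric
    # Skip scaling when the ratio is already close enough to 1.0.
    if abs(ratio - 1.0) <= tolerance:
        return current
    wanted = math.ceil(current * ratio)
    # Clamp to the configured replica bounds.
    return max(min_replicas, min(max_replicas, wanted))


# 4 replicas averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
# Load drops to 30% average CPU: scale in.
print(desired_replicas(6, current_metric=30, target_metric=60))  # 3
```

The tolerance band keeps the autoscaler from flapping between replica counts when load hovers near the target.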

Disadvantages

- Limited isolation: Unlike VMs, containers share the host operating system's kernel, so a kernel vulnerability exploited from a compromised container can put the host and every other container on it at risk.
- Complex data persistence: Data management in containers can be challenging because a container's writable layer is discarded when the container is removed, so long-lived data must be kept in volumes or external storage, especially in architectures built around ephemeral containers.
- Management complexity: Managing large numbers of containers can become complex, requiring orchestration tools (like Kubernetes) and advanced technical skills.
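
The data-persistence challenge follows from how images and containers are layered: an image's read-only layers are shared, each container adds its own thin writable layer on top, and that layer disappears with the container. The toy model below illustrates this copy-on-write lookup, with dictionaries standing in for layers. The class and function names are hypothetical; real runtimes implement the idea with union filesystems such as OverlayFS.

```python
from dataclasses import dataclass, field
from types import MappingProxyType
from typing import Optional


@dataclass(frozen=True)
class Image:
    """Immutable template: an ordered stack of read-only layers (bottom first)."""
    layers: tuple[MappingProxyType, ...]


def make_image(*layers: dict[str, str]) -> Image:
    # MappingProxyType gives a read-only view, so layers cannot be modified.
    return Image(tuple(MappingProxyType(dict(layer)) for layer in layers))


@dataclass
class Container:
    image: Image
    writable: dict[str, str] = field(default_factory=dict)  # lost when the container goes

    def read(self, path: str) -> Optional[str]:
        # Look in the writable layer first, then image layers from top to bottom.
        for layer in (self.writable, *reversed(self.image.layers)):
            if path in layer:
                return layer[path]
        return None

    def write(self, path: str, data: str) -> None:
        # Copy-on-write: changes land in the container's own layer, never the image.
        self.writable[path] = data


base = make_image({"/etc/os-release": "debian"}, {"/app/config": "v1"})
c1 = Container(base)
c1.write("/app/config", "v2")
c1.write("/data/orders.db", "42 orders")
print(c1.read("/app/config"))      # v2

c2 = Container(base)               # a replacement container from the same image
print(c2.read("/app/config"))      # v1 (the image itself is unchanged)
print(c2.read("/data/orders.db"))  # None (c1's data did not survive)
```

This is why stateful workloads mount volumes or use external storage: anything written only to the container's layer vanishes when the container is replaced.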

The adoption of containers has driven the popularity of microservices, an architectural approach that divides complex applications into small independent services, facilitating their development, deployment, and scalability.

Looking Towards the Future

“The best way to predict the future is to invent it.” - Alan Kay

Looking ahead, these IT infrastructure paradigms are converging with emerging technologies such as artificial intelligence and edge computing. Together, they are redefining infrastructure standards in the digital era and driving the creation of more agile, intelligent systems that can adapt quickly to growing demand.

With artificial intelligence, IT infrastructure systems will increasingly be able to anticipate needs and adapt to new workloads with little human intervention. Similarly, edge computing will allow data to be processed closer to its source, reducing latency and optimizing network usage.

Figure 4. IT Infrastructure Innovation: The Convergence of Intelligence and Technology
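
The latency benefit of edge computing has a simple physical floor: signals in optical fiber travel at roughly 200,000 km/s, about two-thirds of the speed of light in a vacuum. The back-of-the-envelope sketch below computes only that round-trip propagation delay; the distances are hypothetical, and it ignores routing, queuing, and processing time, all of which add to real-world latency.

```python
FIBER_KM_PER_MS = 200.0  # approximate signal speed in fiber: ~200,000 km/s


def round_trip_ms(distance_km: float) -> float:
    """Minimum round-trip propagation delay over fiber, ignoring all other delays."""
    return 2 * distance_km / FIBER_KM_PER_MS


# Hypothetical distances from a data source to where its data is processed.
sites = [("edge node", 10), ("regional data center", 500), ("distant cloud region", 5000)]
for site, km in sites:
    print(f"{site:>22}: {round_trip_ms(km):6.2f} ms")
```

Even before any processing, data sent to a region 5,000 km away spends about 50 ms in transit, compared with about 0.1 ms for an edge node 10 km away.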

Technological innovation is not just a competitive advantage but a necessity for any organization aspiring to lead in its sector. Those companies that adopt these technologies and optimize their infrastructure will be better prepared to seize future opportunities and respond to the challenges posed by digital transformation. With this approach, the evolution of technology architectures will continue to be the engine driving organizational growth and resilience in the years to come.

Need help modernizing your infrastructure? Contact me for an assessment of your current environment.